Instagram, which frustrated its users with a brief outage last week, is taking new steps to protect them. The company, which already penalizes accounts that harass people on the platform, is moving toward stronger deterrents by changing what happens when one user restricts another.

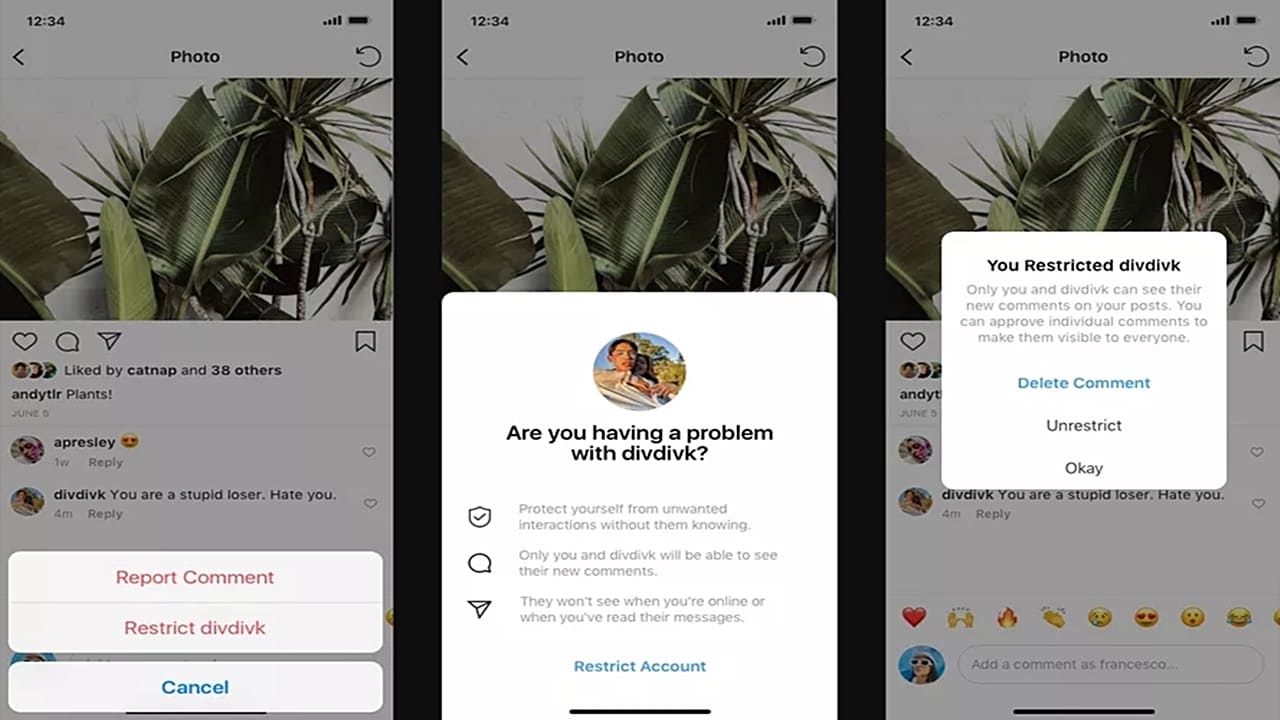

With the new artificial intelligence Instagram has developed to combat cyberbullying, a user who has been harassed will be able to penalize the person responsible. In effect, the existing “shadow ban” system will now be available to individuals as well. A shadow ban cuts off accounts that use an excessive number of tags or share content that disturbs communities from communicating with other users; the posts those accounts publish after the ban are not visible to other users.

Instagram imposes a heavy penalty both on commenters blacklisted by the artificial intelligence and on those restricted by the users they targeted. From now on, a restricted user's comments will be hidden from everyone else. In other words, if someone restricts your account, any comment you leave on that person's photo, or any content you share about them, will be visible only to you and to that person.

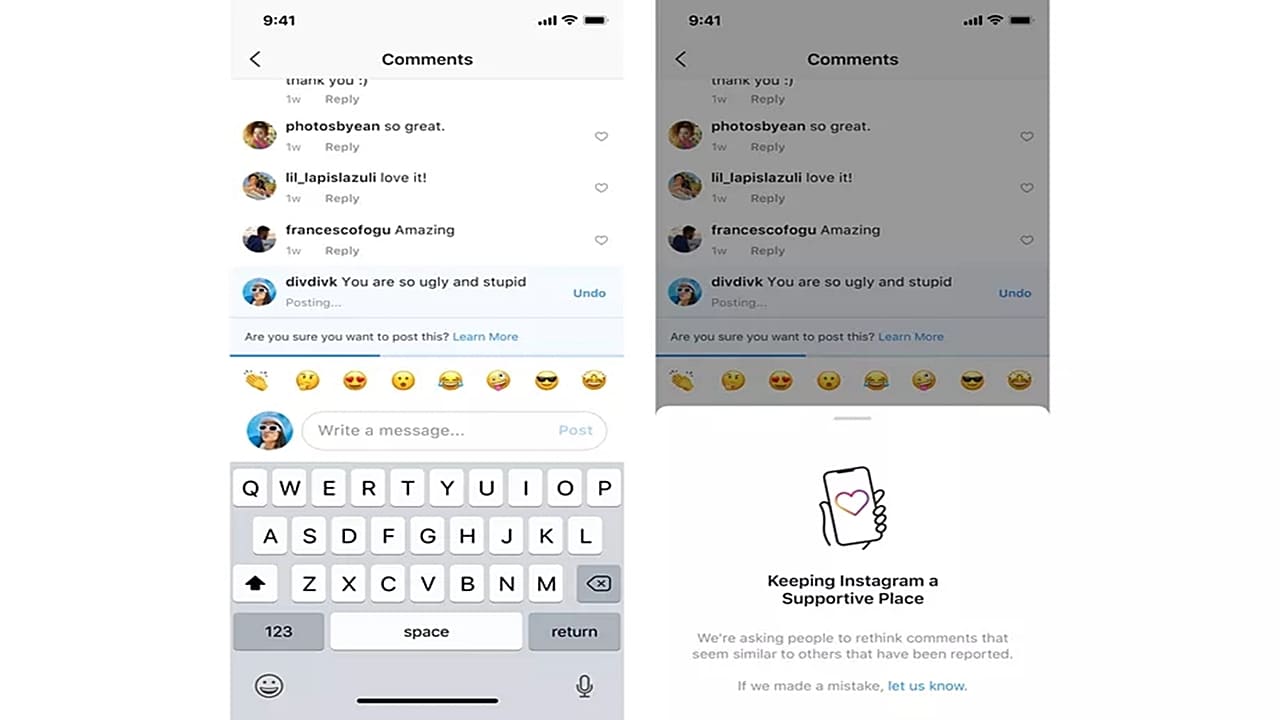

In addition, the restricted person will not be able to see when the account owner who restricted them is active on Instagram, or whether that person has read a message. Beyond this, Instagram is implementing other safety measures. The American company is building artificial intelligence to detect and sanction people who write offensive comments. The system will keep track of users who disturb others with their comments. Before posting, commenters on this list will be shown a warning question such as “Do you really want to post this comment?” This gives them the opportunity to take back their comments.

The restriction method, which is currently in the testing phase, aims to minimize verbal or visual harassment.

Stage Media
